How have scientists overcome the ethical privacy crisis in brain chips?

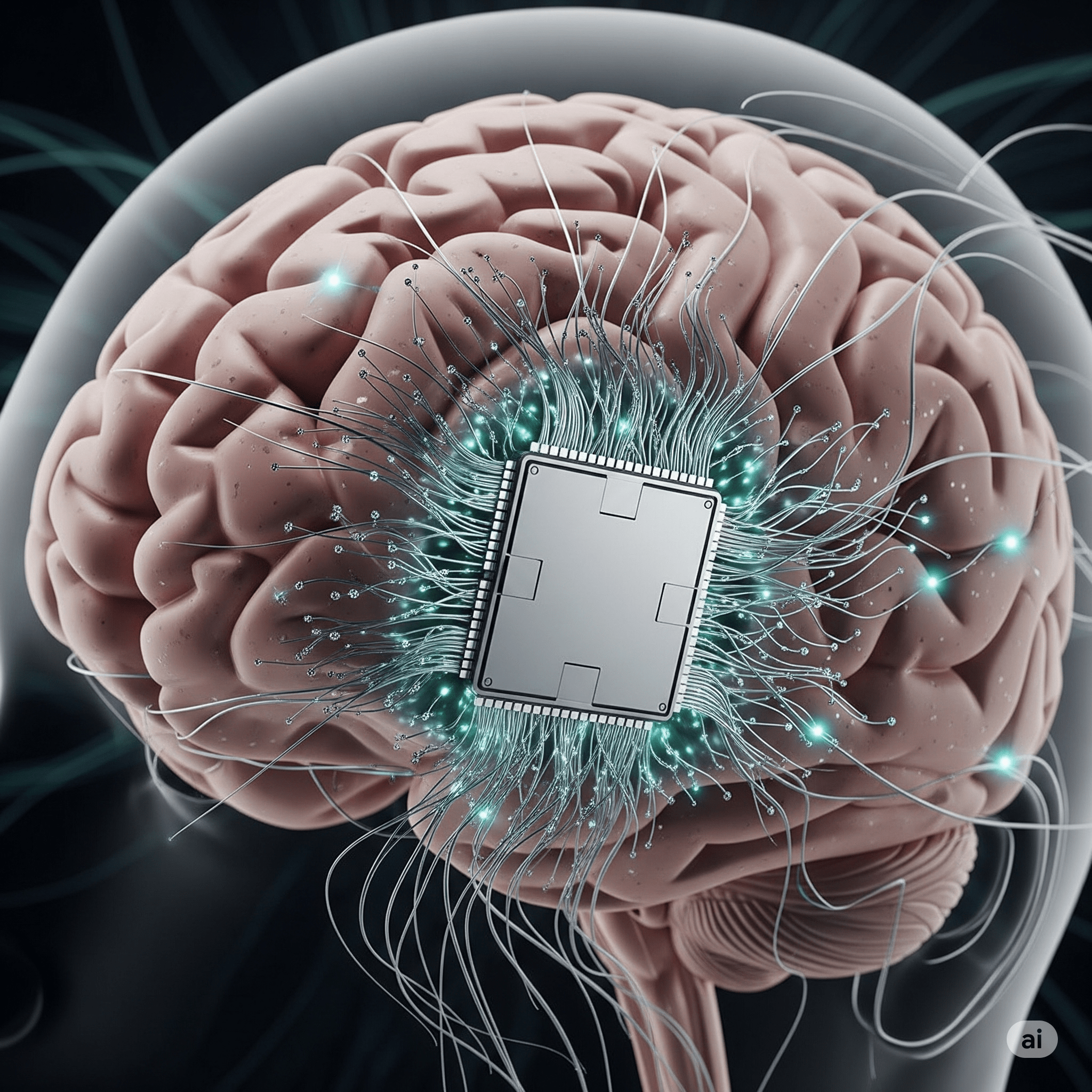

Scientists are tackling significant ethical challenges raised by brain chips, devices that help people with motor disabilities or people who have lost their voice to communicate. A core concern has been that these chips could not distinguish between private thoughts and thoughts the user actually intends to communicate.

According to a report by Ars Technica, a Stanford research team has developed a way to safeguard users’ mental privacy: privacy safeguards built into the brain-chip system itself, designed to keep sensitive thoughts from being decoded.

Benyamin Meschede-Krasa, a Stanford neuroscientist and one of the study’s leaders, explains that inner speech and spoken (or attempted) speech originate in the same brain region. The study initially aimed to convert the speech users were trying to produce into audible sound through the brain chips. However, this proved difficult for patients with ALS or quadriplegia, for whom even attempting to speak is hard.

The researchers therefore shifted their focus to converting inner speech into audible sound, which created a new challenge: protecting privacy. Their solution is a preset mental password. Before the system starts voicing their thoughts, patients imagine this password, which signals the AI that it may begin decoding their signals.
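To make the password idea concrete, here is a minimal Python sketch of a gate that withholds decoded output until an unlock phrase appears. It assumes, as a simplification, that the decoder already produces a stream of words; the real system works on neural signals. The names `PasswordGate` and `gated_output` and the phrase "open sesame" are hypothetical, chosen for illustration and not taken from the study.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional


@dataclass
class PasswordGate:
    """Hypothetical gate: suppresses decoded words until an unlock phrase is seen."""
    unlock_phrase: List[str]
    unlocked: bool = False
    _recent: List[str] = field(default_factory=list)

    def feed(self, word: str) -> Optional[str]:
        # Once unlocked, every decoded word passes through.
        if self.unlocked:
            return word
        # While locked, keep a sliding window as long as the phrase.
        self._recent.append(word)
        if len(self._recent) > len(self.unlock_phrase):
            self._recent.pop(0)
        # Unlock when the window matches the phrase; the phrase itself is never output.
        if self._recent == self.unlock_phrase:
            self.unlocked = True
        return None


def gated_output(words: Iterable[str], phrase: List[str]) -> List[str]:
    """Return only the words decoded after the (hypothetical) password was detected."""
    gate = PasswordGate(unlock_phrase=phrase)
    out: List[str] = []
    for w in words:
        result = gate.feed(w)
        if result is not None:
            out.append(result)
    return out


if __name__ == "__main__":
    stream = ["i", "feel", "tired", "open", "sesame", "hello", "world"]
    print(gated_output(stream, ["open", "sesame"]))  # ['hello', 'world']
```

Everything imagined before the password ("i feel tired") is dropped, which mirrors the privacy goal described above.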

The system has shown promising results, recognizing the password in up to 98% of cases. Decoding the inner speech itself was harder: as the number of words the system could recognize increased, accuracy fell to about 74%. The team also developed an alternative method in which the AI is trained to treat inner speech that patients want to keep private as “silence,” so those thoughts are not decoded.
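The “silence” approach can be illustrated with an equally simplified Python sketch. It assumes a hypothetical upstream classifier that labels each time window as attempted speech, inner speech, or silence; windows labeled as inner speech are relabeled as silence, so only deliberately attempted speech produces words. In the actual system this behavior is likely learned inside the decoder rather than applied as a separate filter, so treat this only as an illustration. `Intent` and `filter_private` are hypothetical names.

```python
from enum import Enum
from typing import List, Tuple


class Intent(Enum):
    """Hypothetical labels a classifier might assign to each time window."""
    ATTEMPTED = "attempted"  # user deliberately tries to speak
    INNER = "inner"          # private inner speech
    SILENCE = "silence"      # no speech activity


def filter_private(windows: List[Tuple[Intent, str]]) -> List[str]:
    """Treat inner speech as silence so only attempted-speech windows yield words."""
    output: List[str] = []
    for intent, word in windows:
        # Relabel private inner speech as silence before decoding.
        label = Intent.SILENCE if intent is Intent.INNER else intent
        if label is Intent.ATTEMPTED:
            output.append(word)
    return output


if __name__ == "__main__":
    windows = [
        (Intent.INNER, "secret"),
        (Intent.ATTEMPTED, "water"),
        (Intent.SILENCE, ""),
        (Intent.ATTEMPTED, "please"),
    ]
    print(filter_private(windows))  # ['water', 'please']
```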

This breakthrough marks a major advancement in neurotechnology, balancing the power of brain-computer interfaces with the fundamental right to mental privacy.