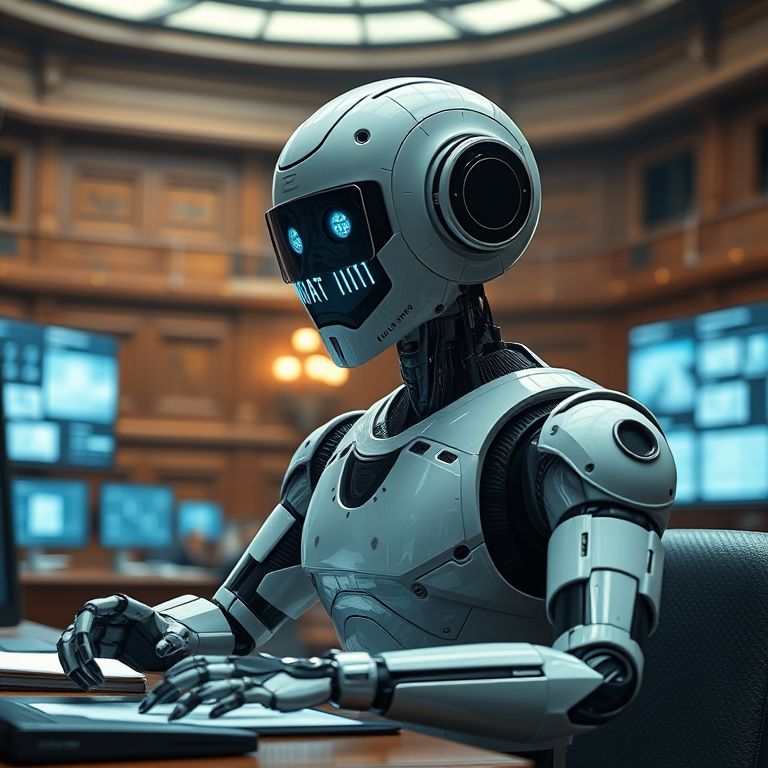

The World’s First AI Minister Appointed to Fight Human Corruption

In the battle against corruption, a new kind of minister has entered the political stage—one that cannot be bribed, swayed by personal agendas, or intimidated by threats.

Meet Deela, the world’s first AI-powered government minister, now serving in Albania. According to AndroidHeadlines, as reported by Al Arabiya Business, Deela has been tasked with overseeing public procurement and is designed to ensure that government contracts are awarded without corruption or favoritism.

Named after the Albanian word for “sun,” Deela represents both a symbolic and practical step toward greater transparency and fairness in governance.

A Bold Leap Into Algorithmic Governance:

Prime Minister Edi Rama introduced Deela as the first-ever cabinet member who isn’t physically present. The logic is straightforward: if human error and bias are the biggest obstacles to fair tenders, why not remove humans from the process altogether?

In principle, an AI system can analyze data, apply rules consistently, and make merit-based decisions without being influenced by personal relationships, political favors, or pressure.

Deela originally launched as a virtual assistant helping citizens access government documents, but its new role is much broader—and potentially transformative.

A Global Ripple Effect:

While some Albanians online remain skeptical, the idea of an AI minister has clear appeal. If Deela succeeds, it could spark a quiet revolution in governance.

Imagine AI ministers serving as financial regulators in one country, city planners in another, or aid distribution managers elsewhere—all evaluated, hired, or even “fired” based on measurable performance.

Such initiatives wouldn’t replace human leaders, but they could take over the tasks most vulnerable to corruption and human error, freeing politicians to focus on complex, human-centered issues that require empathy and creativity.

The Potential Dark Side:

Yet risks remain, and an AI minister requires ironclad safeguards. Algorithms can’t be bribed, but they can still be manipulated, inherit bias from the data they are built on, or be misused if security and oversight are weak.

There are already documented cases of AI systems unintentionally favoring certain political or social groups when guardrails weren’t in place. Risks like these could undermine the very purpose of introducing AI into governance.